Welcome to SySNestor, where we strive to simplify complex tech processes for our readers. In this article, we’ll guide you through the installation of K3s, a lightweight and easy-to-use Kubernetes distribution, on the latest Ubuntu 23. By the end of this tutorial, you’ll have a fully functional k3s cluster up and running, ready to empower your container orchestration journey.

Key Features of K3s on Ubuntu:

- Lightweight Architecture:
  - K3s is known for its minimalistic footprint, making it well-suited for environments with limited resources.
  - The binary is significantly smaller than that of standard Kubernetes, making it easier to deploy and manage.

- Quick Installation:
  - K3s can be installed with a single command, reducing the barrier to entry for users new to Kubernetes.
  - The simplified installation process makes it ideal for development and testing scenarios.

- Reduced Resource Usage:
  - K3s optimizes resource usage without compromising essential Kubernetes functionality.
  - Its streamlined nature doesn't sacrifice performance, making it a powerful solution for various use cases.

Advantages of K3s:

- Ease of Deployment:
  - K3s simplifies the Kubernetes deployment process, allowing users to set up clusters quickly and efficiently.
  - This ease of deployment is particularly beneficial for developers, enabling them to focus on application development rather than intricate cluster configurations.

- Resource Efficiency:
  - With its lightweight design, K3s thrives in resource-constrained environments, making it an excellent choice for edge computing and IoT scenarios.
  - The reduced resource overhead doesn't compromise on the scalability and flexibility inherent in Kubernetes.

- Simplified Maintenance:
  - K3s comes with a straightforward upgrade and maintenance process, reducing the operational burden on administrators.
  - Automated operations and a minimalistic approach to cluster management contribute to a smoother maintenance experience.

- Versatility in Use Cases:
  - K3s is versatile and can be employed across various use cases, from development and testing environments to production deployments in edge computing scenarios.
  - Its adaptability makes it a valuable tool for organizations with diverse infrastructure needs.

Prerequisites for Installation

- Ensure that every node in the cluster has a unique hostname.

- If multiple nodes might share a hostname, or if hostnames may be reused by an automated provisioning system, use the --with-node-id option, which appends a random suffix to each node's name. Alternatively, choose a distinctive name for every node you add to the cluster and pass it with --node-name or $K3S_NODE_NAME.
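Before joining nodes, a quick way to catch duplicate hostnames is to collect them in one list and look for repeats. The sketch below assumes a hypothetical `hosts.txt` file with one hostname per line (for example gathered by running `hostname` on each machine); `find_duplicate_hostnames` is a helper name chosen for this example, not part of K3s.

```shell
#!/bin/sh
# Print every hostname that appears more than once on stdin.
# find_duplicate_hostnames is a hypothetical helper for this article.
find_duplicate_hostnames() {
  # Hostnames are case-insensitive, so lowercase them first, drop blank
  # lines, then let `uniq -d` report entries that occur more than once.
  tr '[:upper:]' '[:lower:]' | grep -v '^[[:space:]]*$' | sort | uniq -d
}
```

Usage: `find_duplicate_hostnames < hosts.txt` prints nothing when all names are unique. If duplicates cannot be avoided, the install script accepts K3s flags after `sh -s -`, for example `curl -sfL https://get.k3s.io | sh -s - --with-node-id`.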

Architecture:

K3s supports the following architectures:

- x86_64

- armhf

- arm64/aarch64

- s390x
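To check a machine against this list, you can compare the output of `uname -m` with the supported architectures. This is a minimal sketch: `k3s_arch_supported` is a hypothetical helper, and mapping `armv7l` (what 32-bit ARM boards typically report) to armhf is an assumption about common hardware.

```shell
#!/bin/sh
# Succeed if the given machine name (as printed by `uname -m`) maps to an
# architecture K3s supports; defaults to the current machine.
# k3s_arch_supported is a hypothetical helper, not part of K3s.
k3s_arch_supported() {
  case "${1:-$(uname -m)}" in
    x86_64)        return 0 ;;  # x86_64
    armv7l|armhf)  return 0 ;;  # 32-bit ARM, hard-float (armhf); assumed mapping
    aarch64|arm64) return 0 ;;  # arm64/aarch64
    s390x)         return 0 ;;  # IBM Z
    *)             return 1 ;;
  esac
}
```

Usage: `k3s_arch_supported && echo "supported" || echo "unsupported: $(uname -m)"`.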

ARM64 PAGE SIZE:

For releases prior to May 2023 (v1.24.14+k3s1, v1.25.10+k3s1, v1.26.5+k3s1, v1.27.2+k3s1) on aarch64/arm64 systems, the kernel must utilize 4k pages. RHEL9, Ubuntu, Raspberry Pi OS, and SLES meet this requirement.
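You can confirm the kernel page size with `getconf PAGESIZE`, which prints the size in bytes (4096 for 4k pages). The helper below is a hypothetical convenience wrapper around that command; pass a value to test a specific size.

```shell
#!/bin/sh
# Succeed if the kernel page size is 4 KiB, which older K3s releases
# require on arm64. With no argument, the running kernel is queried.
# page_size_is_4k is a hypothetical helper name.
page_size_is_4k() {
  local size="${1:-$(getconf PAGESIZE)}"
  [ "$size" -eq 4096 ]
}
```

Usage: `page_size_is_4k || echo "not 4k pages: use a K3s release from May 2023 or later"`.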

Operating Systems:

K3s is designed to operate on most contemporary Linux systems.

Certain operating systems may necessitate additional setup:

- Red Hat Enterprise Linux / CentOS / Fedora

- Ubuntu / Debian

- Raspberry Pi

Note: Older Debian releases may encounter a known iptables bug; refer to Known Issues for details.

In this tutorial, we will use Ubuntu 23.

It is advisable to disable ufw (Uncomplicated Firewall):

sudo ufw disable

If you opt to keep ufw enabled, the following rules are required by default:

sudo ufw allow 6443/tcp #apiserver

sudo ufw allow from 10.42.0.0/16 to any #pods

sudo ufw allow from 10.43.0.0/16 to any #services

The hardware requirements for K3s vary based on the scale of your deployment. The minimum and recommended specifications are:

| Spec | Minimum | Recommended |
|---|---|---|
| CPU | 1 core | 2 cores |
| RAM | 512 MB | 1 GB |
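A quick way to compare a machine against this table is to read the core count from `nproc` and total memory from `/proc/meminfo`. This is a sketch with a hypothetical helper name; note that `MemTotal` excludes memory reserved by the kernel, so a board with exactly 512 MB installed may report slightly less and fall just below the threshold.

```shell
#!/bin/sh
# Classify a machine against the K3s hardware table above.
# Arguments: CORES MEM_MB; with none, the current machine is inspected.
# Prints "recommended", "minimum" or "below-minimum".
# k3s_sizing_check is a hypothetical helper name.
k3s_sizing_check() {
  local cores="${1:-$(nproc)}"
  # MemTotal is reported in kB; convert to MB.
  local mem_mb="${2:-$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)}"
  if [ "$cores" -ge 2 ] && [ "$mem_mb" -ge 1024 ]; then
    echo "recommended"
  elif [ "$cores" -ge 1 ] && [ "$mem_mb" -ge 512 ]; then
    echo "minimum"
  else
    echo "below-minimum"
    return 1
  fi
}
```

Usage: run `k3s_sizing_check` with no arguments on the target machine.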

The Resource Profiling section of the official K3s documentation captures test results that determine the minimum resource requirements for different K3s components, such as an agent, a server with a workload, and a server with one agent. It also analyzes the factors that affect server and agent utilization and describes strategies for protecting the cluster datastore from interference.

Raspberry Pi and Embedded etcd:

When deploying K3s with embedded etcd on a Raspberry Pi, it’s advisable to use an external SSD. This recommendation arises from the write-intensive nature of etcd, which SD cards may struggle to handle due to the IO load.

Disks:

K3s performance is closely tied to database performance. To ensure optimal speed, using an SSD is recommended. Disk performance may vary for ARM devices using an SD card or eMMC.

Networking:

- The K3s server requires port 6443 to be accessible by all nodes.

- For nodes using the Flannel VXLAN backend, they need to reach other nodes over UDP port 8472. If using the Flannel WireGuard backend, UDP port 51820 (and 51821 for IPv6) is required. K3s utilizes reverse tunneling, with nodes making outbound connections to the server, and all kubelet traffic passing through that tunnel. If a custom CNI is used instead of Flannel, the ports needed by Flannel are not required by K3s.

- If the metrics server is utilized, all nodes must be accessible to each other on port 10250.

- For achieving high availability with embedded etcd, server nodes must be accessible to each other on ports 2379 and 2380.

Inbound Rules for K3s Nodes:

| Protocol | Port | Source | Destination | Description |
|---|---|---|---|---|
| TCP | 2379-2380 | Servers | Servers | Required only for HA with embedded etcd |
| TCP | 6443 | Agents | Servers | K3s supervisor and Kubernetes API Server |
| UDP | 8472 | All nodes | All nodes | Required only for Flannel VXLAN |
| TCP | 10250 | All nodes | All nodes | Kubelet metrics |
| UDP | 51820 | All nodes | All nodes | Required only for Flannel WireGuard with IPv4 |
| UDP | 51821 | All nodes | All nodes | Required only for Flannel WireGuard with IPv6 |
| TCP | 5001 | All nodes | All nodes | Required only for embedded distributed registry (Spegel) |
| TCP | 6443 | All nodes | All nodes | Required only for embedded distributed registry (Spegel) |

Typically, all outbound traffic is allowed. Additional firewall changes may be necessary based on the operating system used.
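If you keep ufw enabled, the table above can be turned into ufw commands. The sketch below only prints the commands so you can review them first; the function name and its ROLE/BACKEND arguments are hypothetical, Spegel ports are left out, and the rules open ports to any source (tighten them with `ufw allow from <subnet> to any port <port> proto <tcp|udp>` if your node network is known).

```shell
#!/bin/sh
# Print (without applying) ufw commands for the K3s inbound ports.
# ROLE is "server" or "agent"; BACKEND is "vxlan" or "wireguard".
# k3s_ufw_rules and its arguments are hypothetical, chosen for this sketch.
k3s_ufw_rules() {
  local role="$1"
  local backend="${2:-vxlan}"
  # Default pod and service CIDRs, as in the ufw rules earlier.
  echo "ufw allow from 10.42.0.0/16 to any"
  echo "ufw allow from 10.43.0.0/16 to any"
  echo "ufw allow 10250/tcp"          # kubelet metrics, every node
  case "$backend" in
    vxlan)
      echo "ufw allow 8472/udp"       # Flannel VXLAN
      ;;
    wireguard)
      echo "ufw allow 51820/udp"      # Flannel WireGuard, IPv4
      echo "ufw allow 51821/udp"      # Flannel WireGuard, IPv6
      ;;
    *) echo "unknown backend: $backend" >&2; return 1 ;;
  esac
  case "$role" in
    server)
      echo "ufw allow 6443/tcp"       # supervisor and API server
      echo "ufw allow 2379:2380/tcp"  # embedded etcd, HA only
      ;;
    agent) ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
}
```

Usage: review `k3s_ufw_rules server vxlan`, then apply it with `k3s_ufw_rules server vxlan | sudo sh -x`.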

Large Clusters:

For large-scale K3s clusters, hardware requirements are tailored to the cluster size. A high-availability setup with an external database is recommended for production environments, with suggested options being MySQL, PostgreSQL, or etcd.

Minimum CPU and memory requirements for each server node in a high-availability K3s cluster, based on deployment size:

| Deployment Size | Nodes | vCPUs | RAM |
|---|---|---|---|
| Small | Up to 10 | 2 | 4 GB |
| Medium | Up to 100 | 4 | 8 GB |
| Large | Up to 250 | 8 | 16 GB |
| X-Large | Up to 500 | 16 | 32 GB |
| XX-Large | 500+ | 32 | 64 GB |

Adjustments may be needed based on specific requirements and usage patterns in large-scale deployments.
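The sizing table can be read as a simple lookup from planned node count to server size. This sketch uses a hypothetical helper name and treats each "Up to" bound as inclusive, so 500 nodes is X-Large and 501 is XX-Large.

```shell
#!/bin/sh
# Map a planned node count to the HA server sizing tier in the table above.
# Prints "<tier> <vCPUs> <RAM>". ha_server_size is a hypothetical helper.
ha_server_size() {
  local nodes="$1"
  if   [ "$nodes" -le 10 ];  then echo "Small 2 4GB"
  elif [ "$nodes" -le 100 ]; then echo "Medium 4 8GB"
  elif [ "$nodes" -le 250 ]; then echo "Large 8 16GB"
  elif [ "$nodes" -le 500 ]; then echo "X-Large 16 32GB"
  else                            echo "XX-Large 32 64GB"
  fi
}
```

Usage: `ha_server_size 120` prints `Large 8 16GB`.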

Installing K3s on Ubuntu 23

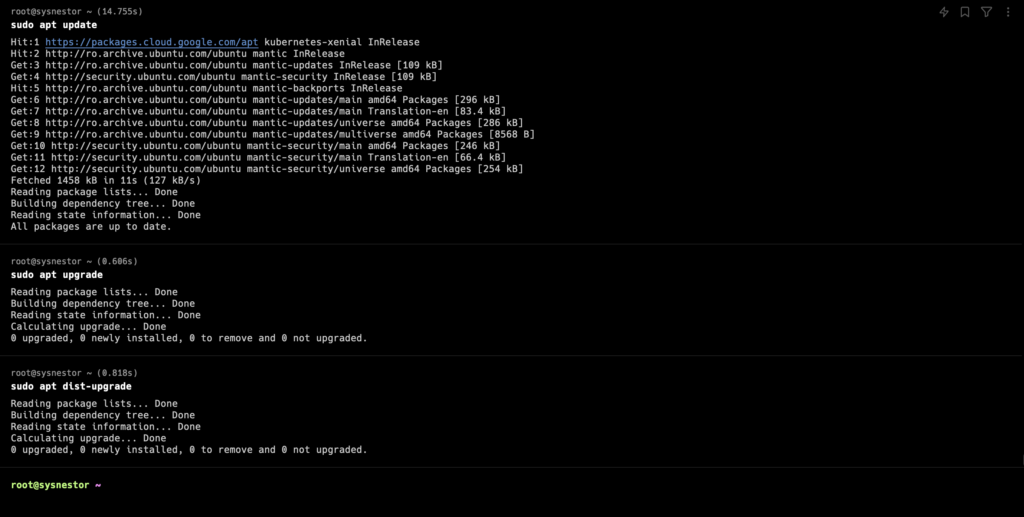

Ensure that your Ubuntu 23 system is up-to-date with the latest packages and security patches. Use the following commands in your terminal:

sudo apt update

sudo apt upgrade

sudo apt dist-upgrade

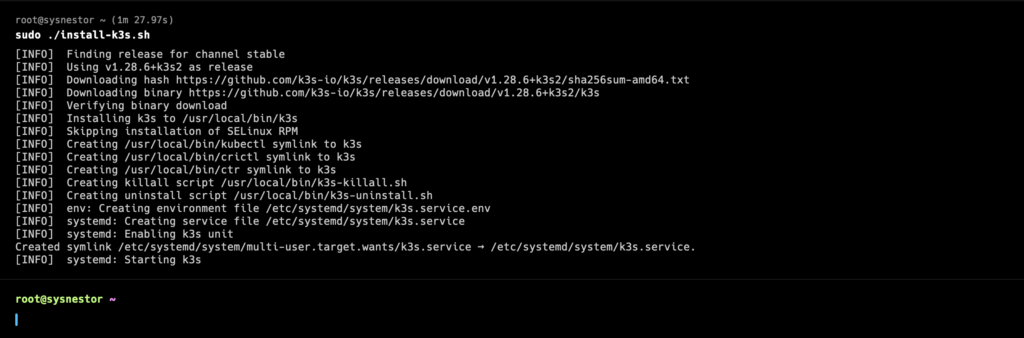

a. Downloading the k3s Installation Script:

Retrieve the k3s installation script by using the following command:

curl -sfL https://get.k3s.io -o install-k3s.sh

b. Executing the Installation Script:

Run the installation script with the following command:

chmod +x install-k3s.sh

sudo ./install-k3s.sh

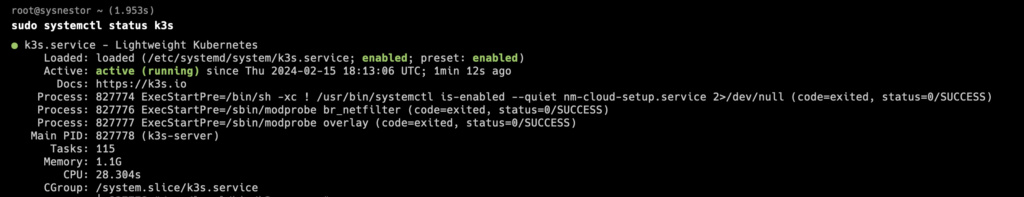

c. Verifying the Installation:

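Confirm that k3s has been installed successfully by checking the service status and listing the nodes. By default, the kubeconfig that K3s writes to /etc/rancher/k3s/k3s.yaml is readable only by root, so run kubectl with sudo for now:

sudo systemctl status k3s

sudo kubectl get nodes

To use kubectl as your regular user, copy the kubeconfig into your home directory, take ownership of it, and point KUBECONFIG at it:

mkdir -p ~/.kube

sudo cp /etc/rancher/k3s/k3s.yaml ~/.kube/config

sudo chown $(id -u):$(id -g) ~/.kube/config

export KUBECONFIG=~/.kube/config

Configuring k3s for Your Environment: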

a. Customizing k3s Settings:

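Modify k3s settings to suit your requirements. K3s reads its configuration from /etc/rancher/k3s/config.yaml, whose keys mirror the command-line flags. The installer does not create this file, so create it with your preferred text editor if it does not exist, then restart the service (sudo systemctl restart k3s) for the changes to take effect.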
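As an illustration, the function below writes a small config file. It is a sketch, not a recommended configuration: the function name is hypothetical, the `tls-san` hostname is a placeholder, and disabling Traefik is only an example of the `disable` key. Pass another path to write a copy somewhere else for review first.

```shell
#!/bin/sh
# Write an example K3s config file. Keys mirror `k3s server` flags
# without the leading dashes; values here are examples only.
# write_k3s_config is a hypothetical helper name.
write_k3s_config() {
  local target="${1:-/etc/rancher/k3s/config.yaml}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<'EOF'
# Extra hostname for the API server certificate (placeholder value).
tls-san:
  - "k3s.example.internal"
# Do not deploy the bundled Traefik ingress controller.
disable:
  - traefik
EOF
}
```

Usage: in a root shell (for example after `sudo -i`), run `write_k3s_config` and then `systemctl restart k3s`.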

b. Securing Your k3s Cluster:

Ensure that your k3s cluster is secure by following best practices. This may include adjusting access controls, setting up authentication, and enabling encryption. Refer to the official k3s documentation for detailed guidance.

Exploring k3s Functionality:

a. Deploying Your First Application:

Use kubectl, the Kubernetes command-line tool, to deploy your first application. For example, you can deploy a simple Nginx web server:

kubectl create deployment nginx --image=nginx

Troubleshooting Common Issues

Check k3s Service Status:

Verify that the k3s service is running correctly. Use the following command to check its status:

sudo systemctl status k3s

Look for any error messages or warnings that might indicate issues with the service.

Examine Logs:

Review the K3s logs to identify any error messages or warnings. On systemd-based systems such as Ubuntu, K3s logs to the systemd journal rather than to a file under /var/log. You can view the logs with:

sudo journalctl -u k3s

Inspect kubelet Logs:

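Check kubelet logs for potential issues. K3s runs the kubelet inside the k3s process rather than as a separate kubelet service, so kubelet messages appear in the K3s journal: use sudo journalctl -u k3s on server nodes, or the following command on agent nodes:

sudo journalctl -u k3s-agent

Check Node Status: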

Ensure that all nodes in your cluster are in a ready state. Use the following command:

kubectl get nodes

If nodes are not ready, investigate the underlying issues.
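Right after installing or joining a node, it can take a short while for it to become Ready. Rather than re-running `kubectl get nodes`, you can block until every node reports Ready with `kubectl wait`. The wrapper name and the `KUBECTL` override (handy for `sudo kubectl` or `k3s kubectl`) are hypothetical conveniences for this sketch.

```shell
#!/bin/sh
# Wait until all nodes report the Ready condition, or fail after the
# timeout (default 120s). KUBECTL may hold another client command, such
# as "sudo kubectl"; it is left unquoted on purpose so it can contain
# several words. wait_for_nodes is a hypothetical helper name.
wait_for_nodes() {
  ${KUBECTL:-kubectl} wait --for=condition=Ready node --all --timeout="${1:-120s}"
}
```

Usage: `wait_for_nodes 300s || kubectl describe nodes` shows node conditions and events if the wait times out.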

Verify Network Configuration:

Confirm that the networking components (e.g., Flannel) are working as expected. Check the status of network pods:

kubectl get pods -n kube-system

Investigate any pod failures or issues.

Examine Resource Utilization:

Inspect resource utilization on your nodes. High CPU or memory usage could indicate performance issues. Use tools like top or kubectl top nodes to check resource usage.

Check Storage and Disk Space:

Verify that there is sufficient disk space on your nodes, especially if running applications with persistent storage. Insufficient space can lead to pod failures.
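A small check on the filesystem that holds the K3s data directory (/var/lib/rancher/k3s by default) can warn you before the kubelet starts evicting pods. This sketch parses POSIX `df -P` output; the helper name and the 85% default threshold are arbitrary choices for illustration.

```shell
#!/bin/sh
# Warn when the filesystem holding PATH is at least LIMIT percent full.
# check_disk and its 85% default are hypothetical choices for this sketch.
check_disk() {
  local path="${1:-/var/lib/rancher/k3s}"
  local limit="${2:-85}"
  local used
  # In `df -P` output, column 5 of the second line is "Use%", e.g. "42%".
  used=$(df -P "$path" 2>/dev/null | awk 'NR == 2 { sub(/%/, "", $5); print $5 }')
  if [ -z "$used" ]; then
    echo "cannot read disk usage for $path" >&2
    return 2
  fi
  if [ "$used" -ge "$limit" ]; then
    echo "WARN: $path is ${used}% full"
    return 1
  fi
  echo "OK: $path is ${used}% full"
}
```

Usage: run `check_disk` on each node, or `check_disk /var/lib/rancher/k3s 90` for a different threshold.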

Review Firewall and Network Rules:

Ensure that firewall rules and network configurations permit the required communication between nodes. Refer to the inbound rules mentioned earlier for necessary ports.

Inspect Container Logs:

Examine the logs of specific containers to identify issues. Replace the placeholders with your pod name, container name, and namespace:

kubectl logs <pod-name> -c <container-name> -n <namespace>

Verify DNS Resolution:

Ensure that DNS resolution is working correctly within the cluster. From a pod, test that both in-cluster service names and external domains resolve.
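One way to run such a test is a throwaway pod that runs `nslookup` and is deleted when it exits. The image below is the dnsutils image referenced in the Kubernetes "Debugging DNS Resolution" guide; the function name, pod name, and `KUBECTL` override are hypothetical choices for this sketch.

```shell
#!/bin/sh
# Resolve NAME (default: kubernetes.default) from a temporary pod that is
# removed afterwards (--rm requires attaching, hence -i).
# dns_check and KUBECTL are hypothetical names for this sketch.
dns_check() {
  ${KUBECTL:-kubectl} run dns-check --rm -i --restart=Never \
    --image=registry.k8s.io/e2e-test-images/jessie-dnsutils:1.3 \
    -- nslookup "${1:-kubernetes.default}"
}
```

Usage: `dns_check` tests an in-cluster service name and `dns_check example.com` tests an external domain. If only the external lookup fails, check the upstream resolvers on the node; if both fail, inspect the CoreDNS pods in kube-system.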

Check Kubernetes API Server:

Confirm that the Kubernetes API server is accessible. Verify the status of the API server:

kubectl cluster-info

Update k3s:

Ensure that you are using the latest version of k3s; updating to the latest release might resolve known issues. To upgrade, re-run the installation script. By default it installs the latest release from the stable channel; pass the same environment variables and flags you used for the original installation:

curl -sfL https://get.k3s.io | sh -

Congratulations! You've successfully followed our comprehensive guide on installing k3s on Ubuntu 23. We hope this article has demystified the process for you, allowing you to harness the power of Kubernetes with ease. Stay tuned to SySNestor for more tech tutorials and guides to simplify your journey in the ever-evolving world of technology.
