Welcome to modern application deployment, where things move fast and innovation is a necessity rather than a buzzword. Orchestrating and managing containerized applications efficiently has become a cornerstone of running software reliably. Enter Kubernetes, the platform that reshaped container orchestration.

Introduction to Kubernetes

Kubernetes, commonly shortened to K8s, is an open-source container orchestration platform. It automates deploying, scaling, and managing containerized applications. It was created to simplify the challenges of running containers at large scale, and it has since matured into a reliable solution used by major tech companies and startups alike.

Key Components

At the heart of Kubernetes are its key components, each playing a crucial role in ensuring seamless application deployment:

- Nodes: These are the physical or virtual machines that form the cluster. Nodes are responsible for running applications and hosting the containers.

- Pods: The smallest deployable units in Kubernetes. A pod wraps one or more containers that share the same network namespace and can share storage volumes.

- Master Node: Also called the control plane node, this is the brain of the cluster. It runs the control plane components (API server, scheduler, controller manager, and etcd) that schedule workloads, track their state, and drive scaling and self-healing.

- Kubelet: An agent running on each node that makes sure the containers described in each Pod's specification are running and healthy.

- Controller Manager: Runs the controllers that continuously compare the actual state of the cluster with the desired state and act to bring the two back in line.

- etcd: A distributed, consistent key-value store that holds all cluster configuration and state, acting as the cluster's source of truth.
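Once the cluster from this guide is running, you can see most of these components for yourself. The helper below is a minimal sketch, assuming kubectl is configured as in Step 5 and the cluster was built with kubeadm, which runs etcd, the API server, the scheduler, and the controller manager as static Pods in the kube-system namespace. The function name show_cluster_components is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: lists the cluster pieces described above.
# Assumes kubectl is installed and ~/.kube/config points at a kubeadm cluster.
show_cluster_components() {
  # Nodes: the physical or virtual machines that form the cluster
  kubectl get nodes -o wide
  # On kubeadm clusters, etcd, kube-apiserver, kube-controller-manager and
  # kube-scheduler run as static Pods in the kube-system namespace
  kubectl get pods -n kube-system -o wide
  # The kubelet is not a Pod; it runs as a systemd service on every node
  systemctl is-active kubelet
}
```

Run it on the control plane node after Step 5; the kubelet check reports on whichever node you run it from.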

Significance in Modern Application Deployment:

Scalability and Efficiency: Kubernetes lets you scale applications up or down as workloads change. Autoscaling features such as the Horizontal Pod Autoscaler adjust the number of running Pods automatically, improving resource utilization and reducing operational costs.

High Availability: By running application replicas across multiple nodes and automatically restarting or rescheduling failed containers, Kubernetes minimizes downtime and improves the overall reliability of your applications.

Declarative Configuration: With Kubernetes, you can define the desired state of your applications and infrastructure through declarative configuration files. This simplifies deployment, updates, and rollbacks, providing a clear and reproducible process.

Ecosystem Integration: Kubernetes has a large ecosystem of tools and extensions that integrate with cloud providers, monitoring solutions, and CI/CD pipelines.
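The declarative model and autoscaling described above can be sketched with two small helpers. This is an illustration under assumptions: the Deployment name web and the nginx:1.25 image are hypothetical, and kubectl autoscale only takes effect if a metrics source such as metrics-server is installed, which kubeadm does not do for you. The CPU request matters because the autoscaler measures utilization as a percentage of it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: declares the desired state of a small web app and lets
# Kubernetes reconcile it. "web" and "nginx:1.25" are illustrative names.
apply_web_deployment() {
  replicas=${1:-3}
  # The manifest is the desired state; kubectl apply is idempotent, so
  # re-running it with a changed value updates the existing Deployment.
  kubectl apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          resources:
            requests:
              cpu: 100m   # the autoscaler computes CPU % against this request
EOF
}

# Creates a HorizontalPodAutoscaler for the Deployment (requires metrics-server).
autoscale_web() {
  kubectl autoscale deployment web --cpu-percent=50 --min=2 --max=10
}
```

Running apply_web_deployment 3 and later apply_web_deployment 5 changes only the declared replica count and lets Kubernetes reconcile the difference. If a later change to the Pod template (for example, a new image) misbehaves, kubectl rollout undo deployment/web returns to the previous revision.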

System Requirements for Kubernetes on Ubuntu 23

Ubuntu 23 (the 23.04 and 23.10 releases) brings a range of new features and improvements. Before we embark on our Kubernetes voyage, let's make sure each machine meets the minimum requirements below.

Master Node:

- CPU: 2 cores or more

- RAM: 2 GB or more

- Disk: 20 GB or more

Worker Node:

- CPU: 1 core or more

- RAM: 1 GB or more

- Disk: 20 GB or more

These are basic requirements for a small-scale or development setup; note that the kubeadm documentation recommends at least 2 GB of RAM per machine, workers included. For production environments or larger deployments, you'll need to scale up the resources accordingly. The actual requirements depend on factors such as the number of nodes, the complexity of your applications, and the traffic they generate.
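To compare a machine against these minimums before installing anything, here is a rough sketch. The thresholds are copied from the lists above; the 90% allowance on memory is an assumption of this sketch, since MemTotal in /proc/meminfo reports slightly less than the installed RAM. It relies on GNU coreutils (nproc, df --output), which Ubuntu ships by default. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: returns 0 if the given resources meet the minimums
# listed above for the role, 1 if not, 2 for an unknown role.
meets_requirements() {
  role=$1 cpus=$2 mem_mb=$3 disk_gb=$4
  min_disk_gb=20
  case "$role" in
    control-plane) min_cpus=2; min_mem_mb=2048 ;;
    worker)        min_cpus=1; min_mem_mb=1024 ;;
    *) echo "unknown role: $role" >&2; return 2 ;;
  esac
  # Assumption: accept 90% of the nominal RAM, because MemTotal is a bit
  # lower than the installed memory
  [ "$cpus" -ge "$min_cpus" ] &&
    [ "$mem_mb" -ge $(( min_mem_mb * 9 / 10 )) ] &&
    [ "$disk_gb" -ge "$min_disk_gb" ]
}

# Reads this machine's CPU count, memory and root-disk size, then checks them.
check_this_machine() {
  role=${1:-worker}
  cpus=$(nproc)
  mem_mb=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
  disk_gb=$(df --output=size -BG / | tail -n 1 | tr -dc '0-9')
  echo "role=$role cpus=$cpus mem=${mem_mb}MB disk=${disk_gb}GB"
  if meets_requirements "$role" "$cpus" "$mem_mb" "$disk_gb"; then
    echo "OK: meets the $role minimums"
  else
    echo "WARNING: below the $role minimums" >&2
    return 1
  fi
}
```

For example, run check_this_machine control-plane on the master node and check_this_machine worker on each worker. kubeadm runs its own preflight checks again during kubeadm init.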

Why Docker (and containerd) Comes Before Kubernetes

Before we set the Docker containers afloat, let’s understand why Docker is the trusty first mate for Kubernetes:

Docker Containers: Kubernetes orchestrates containers, and Docker is a convenient way to build and run them. Images built with Docker behave the same way across environments, which makes Docker a natural choice for packaging and distributing applications.

Isolation and Efficiency: Containers give applications lightweight, isolated environments. That isolation is what lets Kubernetes schedule, scale, and replace each container independently.

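Compatibility and Ecosystem: Since Kubernetes 1.24 removed the dockershim, the kubelet no longer talks to the Docker Engine directly; it uses a CRI-compatible runtime instead. Installing Docker's packages also installs containerd (the containerd.io package), which is the runtime kubeadm uses in this guide, and images built with Docker run on it unchanged. Docker also offers a large ecosystem of pre-built images and tools.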
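To see this split on a node once Docker and the cluster are installed, here is a small sketch. It assumes both packages from the steps below are present; ctr is the low-level client that ships with the containerd.io package. Docker keeps its containers in containerd's moby namespace, while the kubelet's containers live in the k8s.io namespace. The function name show_runtime_split is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: shows that Docker and Kubernetes share one containerd
# but keep their containers in separate containerd namespaces.
show_runtime_split() {
  # Both services should be active after the installation steps
  systemctl is-active docker containerd
  # Containers started with `docker run` live in the "moby" namespace
  sudo ctr --namespace moby containers list
  # Containers started by the kubelet through CRI live in the "k8s.io" namespace
  sudo ctr --namespace k8s.io containers list
}
```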

Step-by-Step Guide on Installing Docker on Ubuntu 23

Now that we’ve laid the groundwork, let’s dive into the installation process:

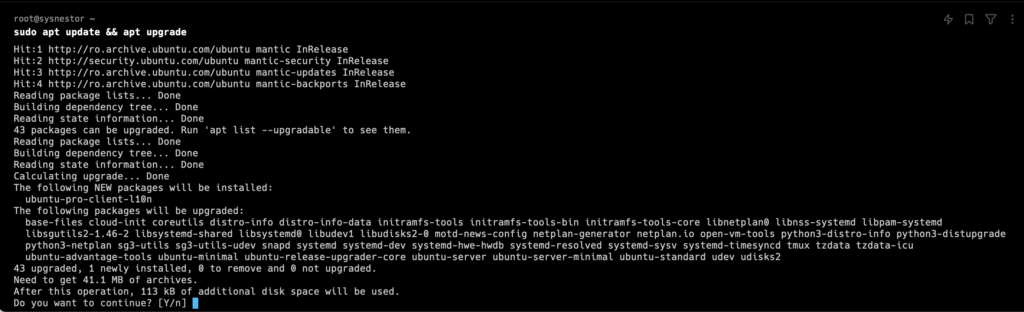

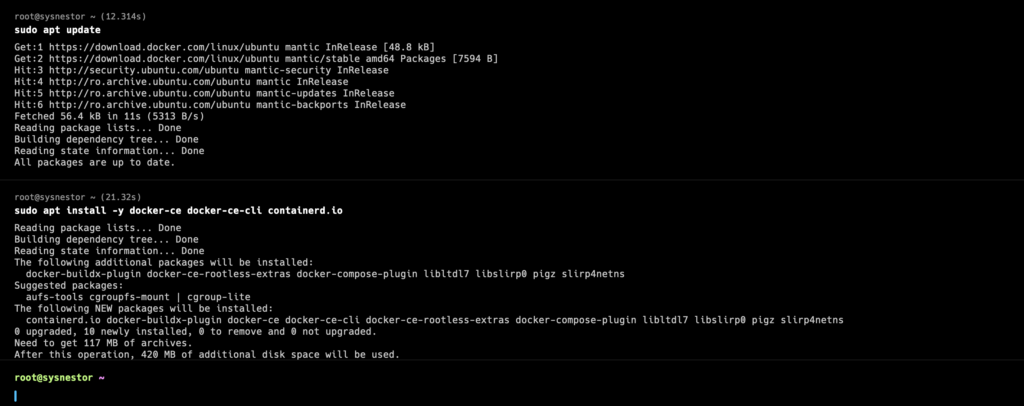

Step 1: Update System Repositories

sudo apt update && sudo apt upgrade -y

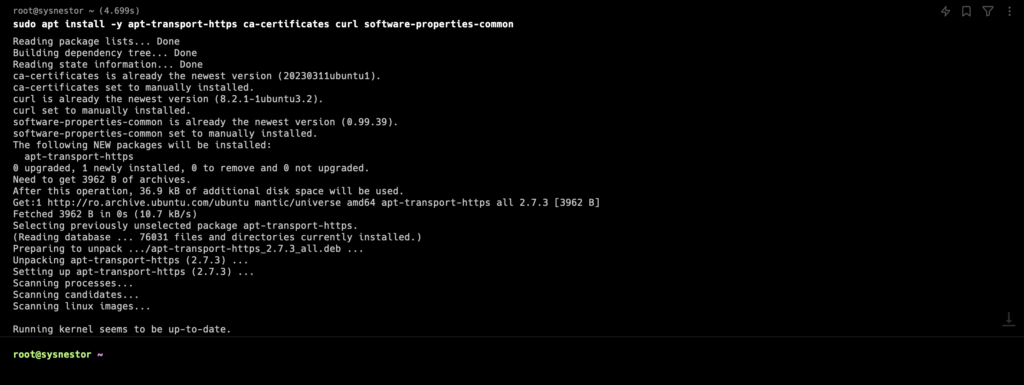

Step 2: Install Docker Dependencies

sudo apt install -y apt-transport-https ca-certificates curl software-properties-common

Step 3: Add Docker GPG Key

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

Step 4: Set Up Docker Repository

echo "deb [signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

Step 5: Install Docker Engine

sudo apt update

sudo apt install -y docker-ce docker-ce-cli containerd.io

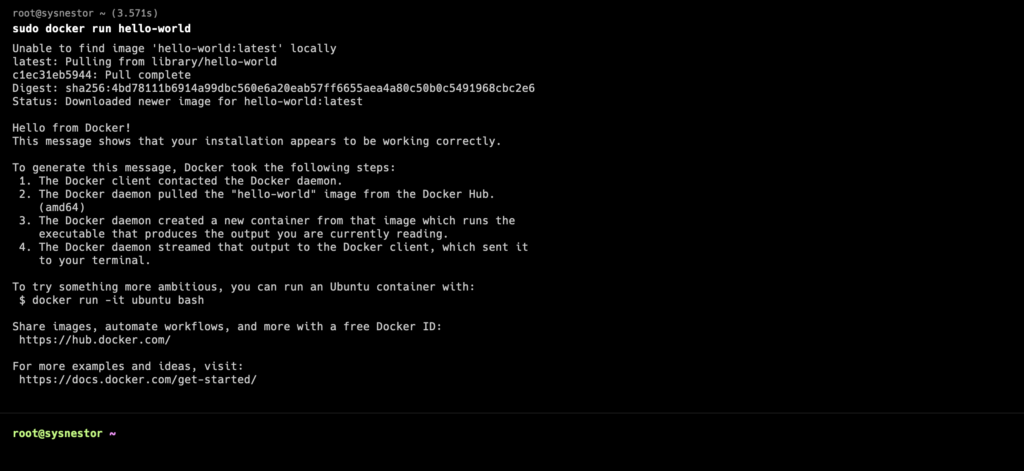

Step 6: Verify Docker Installation

sudo docker run hello-world

Step-by-Step Guide to Installing Kubernetes with kubeadm on Ubuntu 23

Step 1: Add Kubernetes GPG key

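The original Google-hosted repository (packages.cloud.google.com and apt.kubernetes.io) was deprecated in 2023 and has since been shut down, so the key and packages now come from the community-owned pkgs.k8s.io repository. Each Kubernetes minor version has its own repository; v1.28 is used here, matching the version in the log further below.

sudo mkdir -p -m 755 /etc/apt/keyrings

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

Step 2: Add Kubernetes apt repository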

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

Step 3: Install kubeadm, kubelet, and kubectl

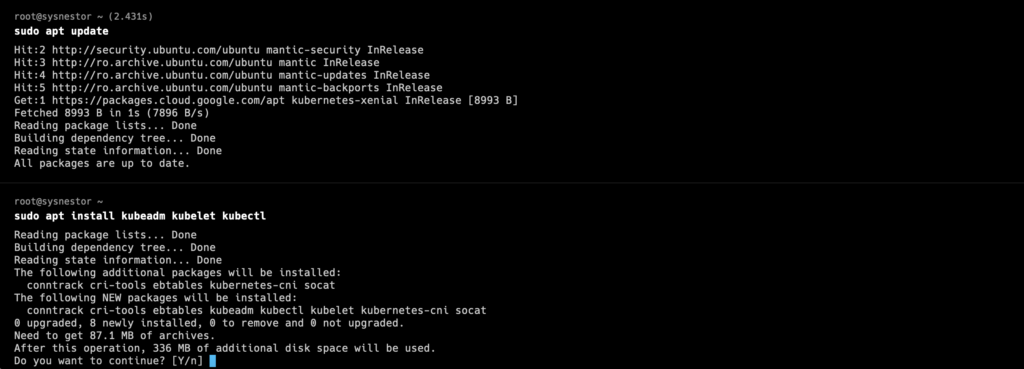

sudo apt update

sudo apt install kubeadm kubelet kubectl

sudo apt-mark hold kubelet kubeadm kubectl

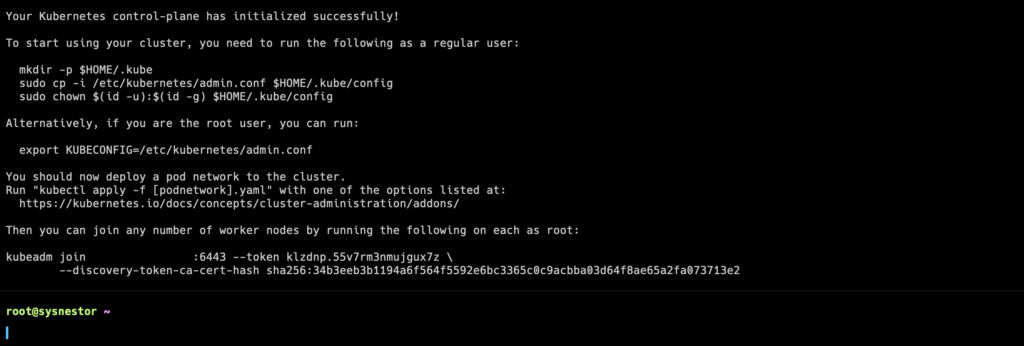
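Before initializing the control plane, kubeadm expects a few kernel settings that Ubuntu does not enable by default; in particular, its preflight checks fail if IPv4 forwarding is off. The sketch below follows the settings listed in the Kubernetes container runtime documentation. MODULES_CONF and SYSCTL_CONF are hypothetical overrides used only to point the function at other files; by default it writes to /etc/modules-load.d/k8s.conf and /etc/sysctl.d/k8s.conf.

```shell
#!/usr/bin/env bash
# Hypothetical helper: kernel networking prerequisites for kubeadm.
# MODULES_CONF / SYSCTL_CONF are illustrative overrides for the target files.
prepare_kernel_for_kubernetes() {
  modules_conf=${MODULES_CONF:-/etc/modules-load.d/k8s.conf}
  sysctl_conf=${SYSCTL_CONF:-/etc/sysctl.d/k8s.conf}
  # overlay backs container filesystems; br_netfilter makes bridged pod
  # traffic visible to iptables. Listing them here loads them on every boot.
  printf 'overlay\nbr_netfilter\n' | sudo tee "$modules_conf" > /dev/null
  # Load both modules right away as well
  sudo modprobe overlay
  sudo modprobe br_netfilter
  # Let iptables see bridged traffic and enable IPv4 forwarding
  sudo tee "$sysctl_conf" > /dev/null <<'EOF'
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF
  # Apply all sysctl.d files without rebooting
  sudo sysctl --system
}
```

Run prepare_kernel_for_kubernetes once on every node (control plane and workers) before kubeadm init or kubeadm join.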

Step 4: Initialize the Kubernetes Control Plane

sudo kubeadm init

Debugging

If you encounter the following error, here is a hotfix:

sudo kubeadm init

I0214 18:02:41.106245 22276 version.go:256] remote version is much newer: v1.29.2; falling back to: stable-1.28

[init] Using Kubernetes version: v1.28.7

[preflight] Running pre-flight checks

[WARNING Swap]: swap is enabled; production deployments should disable swap unless testing the NodeSwap feature gate of the kubelet

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR CRI]: container runtime is not running: output: time="2024-02-14T18:02:41Z" level=fatal msg="validate service connection: CRI v1 runtime API is not implemented for endpoint \"unix:///var/run/containerd/containerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService"

, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

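To see the stack trace of this error execute with --v=5 or higher

The ERROR CRI line appears because the default /etc/containerd/config.toml shipped with the containerd.io package disables containerd's CRI plugin, and the swap warning appears because swap is enabled. The hotfix disables swap (immediately and permanently in /etc/fstab), removes that config file so containerd falls back to its defaults with CRI enabled, restarts containerd, and reruns kubeadm init:

sudo swapoff -a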

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

sudo rm /etc/containerd/config.toml

sudo systemctl restart containerd
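Deleting /etc/containerd/config.toml leaves containerd on its built-in defaults, which use the cgroupfs cgroup driver for runc, whereas kubeadm has configured the kubelet for the systemd cgroup driver by default since v1.22. A minimal sketch of an alternative, assuming the containerd 1.6/1.7 config format: regenerate the full default config and switch runc to the systemd driver so both sides match. The function name configure_containerd is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes containerd's full default config (CRI enabled)
# and switches runc to the systemd cgroup driver. Assumes containerd 1.6/1.7.
configure_containerd() {
  config=${1:-/etc/containerd/config.toml}
  sudo mkdir -p "$(dirname "$config")"
  # Dump the built-in defaults instead of the package's CRI-disabled file
  containerd config default | sudo tee "$config" > /dev/null
  # Match the kubelet's cgroup driver (systemd under kubeadm)
  sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$config"
  sudo systemctl restart containerd
}
```

Run configure_containerd on every node, then retry sudo kubeadm init.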

sudo kubeadm init

Step 5: Set Up Kubernetes Configuration for the User

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Step 6: Join Nodes to the Cluster (if applicable)

On each worker node, run the join command printed at the end of kubeadm init:

sudo kubeadm join <control-plane-host>:<control-plane-port> --token <token> --discovery-token-ca-cert-hash sha256:<hash>
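After kubeadm init, nodes report NotReady until a pod network (CNI) add-on is installed, and the join token printed by kubeadm init expires after 24 hours by default. The helpers below are a sketch for both, run on the control plane node. Flannel is used only as an example; it assumes the control plane was initialized with sudo kubeadm init --pod-network-cidr=10.244.0.0/16, the subnet Flannel's manifest expects by default. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for finishing the cluster; run on the control plane node.
# Assumes kubeadm init used --pod-network-cidr=10.244.0.0/16 (Flannel's default).
FLANNEL_MANIFEST=https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

install_pod_network() {
  # Nodes stay NotReady until a CNI plugin is installed
  kubectl apply -f "$FLANNEL_MANIFEST"
}

print_join_command() {
  # Creates a fresh bootstrap token and prints a complete `kubeadm join ...` line
  sudo kubeadm token create --print-join-command
}

wait_for_nodes_ready() {
  # Blocks until every node reports Ready, or fails after 5 minutes
  kubectl wait --for=condition=Ready nodes --all --timeout=300s
}
```

A typical order is install_pod_network, then print_join_command (running its output with sudo on each worker), then wait_for_nodes_ready.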
